eVTOL Design, Simulation, and Fabrication

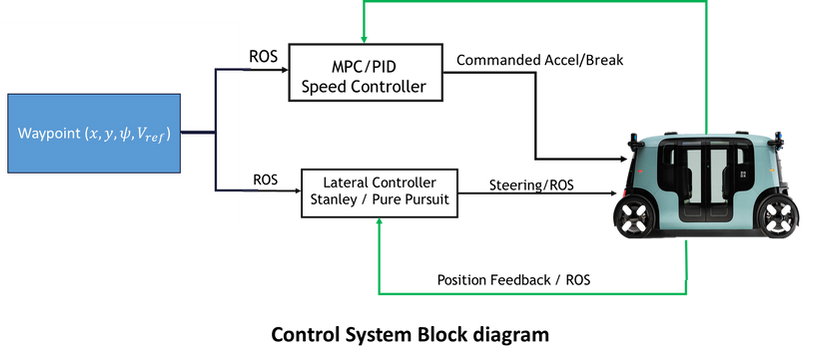

Autonomous Shuttle Bus Control System Design (MPC + Pure Pursuit)

System Requirements:

Proposed Software Architecture

Real time experimental results

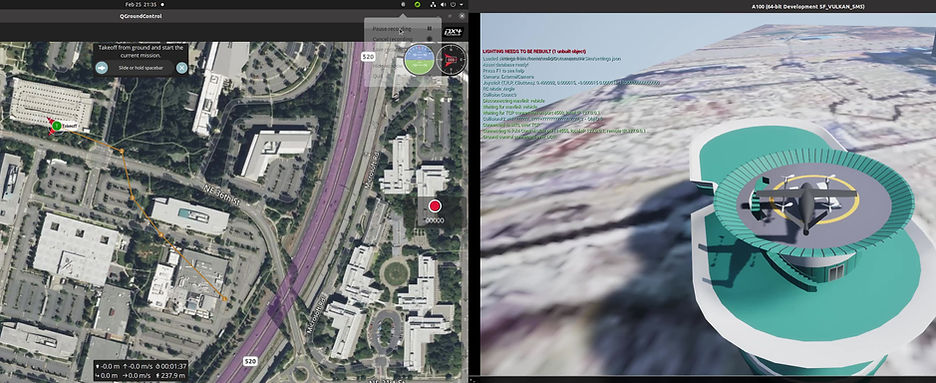

eVTOL: Design, Control, and Fully Autonomous Mission (KUAM Challenge), Silver Prize

Design, Modeling, and Control of a Multirotor UAV

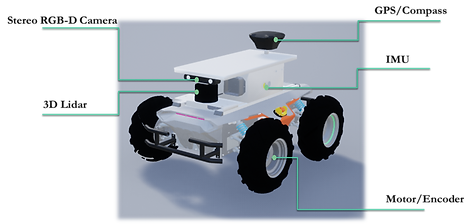

Autonomous Delivery Robot

Deep Reinforcement Learning for Autonomous Map-less Navigation of a Flying Robot

Flying robots are expected to be used in many tasks, like aerial delivery, inspection inside dangerous areas, and rescue. However, their deployment in unstructured and highly dynamic environments has been limited. This paper proposes a novel approach for enabling a Micro Aerial Vehicle (MAV) system equipped with a laser rangefinder and depth sensor to autonomously navigate and explore an unknown indoor or outdoor environment. We built a modular Deep-Q-Network architecture to fuse information from multiple sensors mounted onboard the vehicle. The developed algorithm can perform collision-free flight in the real world while trained entirely on a 3D simulator. Our method does not require prior expert demonstration or 3D mapping and path planning. It transforms the fused sensory data to a velocity control input for the robot through an end-to-end Convolutional Neural Network (CNN). The obtained policy was compared in simulation with the conventional potential field method. Our approach attains zero-shot transfer from simulation to real-world environments that were never experienced during training by simulating realistic sensor data. Several intensive experiments were conducted to show our system’s effectiveness in flying safely in dynamic outdoor and indoor environments.

Transfer Learning Based Semantic Segmentation for 3D Object Detection from Point Cloud

Three-dimensional object detection from LiDAR point cloud data is an indispensable part of autonomous driving perception systems. At night, point cloud-based 3D object detection offers higher accuracy than camera-based detection. However, most LiDAR-based 3D object detection methods are supervised, so their state-of-the-art performance relies heavily on large-scale, well-labeled datasets, which are expensive to obtain and available only for a limited set of scenarios. Transfer learning is a promising way to reduce the need for large-scale training datasets, but existing transfer learning object detectors primarily target 2D rather than 3D object detection. In this work, we use the 3D point cloud data more effectively by representing the scene as a bird's-eye-view (BEV) map, and we propose a transfer learning-based point cloud semantic segmentation method for 3D object detection. The proposed model minimizes the need for large-scale training datasets and consequently reduces training time. First, a preprocessing stage filters the raw point cloud data into a BEV map within a specific field of view. Second, the transfer learning stage takes knowledge from a previously learned classification task (which has more training data) and generalizes it to the semantic segmentation-based 2D object detection task. Finally, the 2D detection results from the BEV image are back-projected into 3D in the postprocessing stage. We verify the results on two datasets, the KITTI 3D object detection dataset and the Ouster LiDAR-64 dataset, and show that the proposed method is highly competitive in terms of mean average precision (mAP up to 70%) while still running at more than 30 frames per second (FPS).
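The preprocessing stage described above can be illustrated with a minimal sketch. It assumes points given as `(x, y, z)` tuples in metres with x pointing forward, and it encodes each BEV cell as a simple point count. The function name `point_cloud_to_bev`, the ranges, and the cell size are hypothetical choices for illustration, not values taken from the paper.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up) in metres


def point_cloud_to_bev(
    points: Sequence[Point],
    x_range: Tuple[float, float] = (0.0, 40.0),    # assumed forward field of view
    y_range: Tuple[float, float] = (-20.0, 20.0),  # assumed lateral field of view
    cell_size: float = 0.5,                        # assumed grid resolution
) -> List[List[int]]:
    """Drop points outside the field of view and count the rest per BEV cell."""
    rows = int(round((x_range[1] - x_range[0]) / cell_size))
    cols = int(round((y_range[1] - y_range[0]) / cell_size))
    bev = [[0] * cols for _ in range(rows)]

    for x, y, _z in points:
        # Keep only points inside the chosen field of view.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        # Map metric coordinates to grid indices, clamped to the last cell.
        row = min(int((x - x_range[0]) / cell_size), rows - 1)
        col = min(int((y - y_range[0]) / cell_size), cols - 1)
        bev[row][col] += 1

    return bev
```

A real pipeline would typically add height and intensity channels to the map. The count-only grid here is just the smallest form that shows the filtering and projection step.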

Nonlinear Model Predictive Control for Self-Driving cars Trajectory Tracking in GNSS-denied environments

In this study, we address the hardware and software design and implementation of an autonomous electric vehicle for path tracking. Control and navigation algorithms are developed and implemented. The vehicle can perform path-tracking maneuvers in environments where positioning signals from the Global Navigation Satellite System (GNSS) are not accessible. The proposed control approach uses a modified constrained input-output nonlinear model predictive controller (NMPC) for path-tracking control. The proposed localization algorithm provides sufficiently accurate position estimation in GNSS-denied environments. We discuss the procedure for designing the vehicle hardware, electronic drivers, communication architecture, localization algorithm, and controller architecture. The system's full state is estimated by fusing visual-inertial odometry (VIO) measurements with wheel odometry data using an extended Kalman filter (EKF). Simulations and real-time experiments are performed. The results demonstrate that the designed autonomous vehicle can perform challenging path-tracking maneuvers without GNSS positioning data at speeds of up to 1 m/s.

Deep Reinforcement Learning-based ROS-Controlled RC Car for Autonomous Path Exploration in the Unknown Environment

Deep reinforcement learning has become the front runner for solving problems in robot navigation and obstacle avoidance. This paper presents a LiDAR-equipped RC car trained in the Gazebo environment using deep reinforcement learning. Reshaped LiDAR data serves as the input to the neural architecture of the training network, and the paper presents a unique way to convert the LiDAR data into a 2D grid map for that input. It reports test results from the trained network in different Gazebo environments and describes the development of the hardware and software systems of the embedded RC car. The hardware system includes a Jetson AGX Xavier, a Teensyduino, and a Hokuyo LiDAR; the software system includes ROS and Arduino C. Finally, the paper presents real-world test results using the model generated from training in simulation.
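One plausible way to turn a planar LiDAR scan into a 2D grid map is sketched below. The abstract does not describe the paper's actual conversion, so the function name `scan_to_grid`, the robot-centred binary occupancy encoding, the grid size, and the range limit are all assumptions made for illustration.

```python
import math
from typing import List, Sequence


def scan_to_grid(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    grid_size: int = 32,     # assumed number of cells per side
    max_range: float = 4.0,  # assumed range limit in metres
) -> List[List[int]]:
    """Mark each valid LiDAR return as occupied in a robot-centred grid."""
    grid = [[0] * grid_size for _ in range(grid_size)]
    cell = 2.0 * max_range / grid_size  # metres per cell

    for i, r in enumerate(ranges):
        # Skip missing, non-positive, or out-of-range readings.
        if not math.isfinite(r) or r <= 0.0 or r >= max_range:
            continue
        angle = angle_min + i * angle_increment
        # Polar to Cartesian in the sensor frame.
        x = r * math.cos(angle)
        y = r * math.sin(angle)
        # Shift so the robot sits at the grid centre, then discretise.
        row = min(int((x + max_range) / cell), grid_size - 1)
        col = min(int((y + max_range) / cell), grid_size - 1)
        grid[row][col] = 1

    return grid
```

The resulting grid can be fed to a convolutional network as a single-channel image, which is one reason a grid representation is attractive compared with a raw range vector.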

Semantic Segmentation-based Lane Keeping Assist System for Autonomous Driving


Real-Time Deep Learning for Moving Target Detection and Tracking Using Unmanned Aerial Vehicle

Real-time object detection and tracking are crucial for many applications such as observation and surveillance and search-and-rescue. Deep learning techniques for object detection and tracking have recently advanced considerably, driven by improvements in computing hardware. Based on these ideas, the YOLO deep learning visual object detection algorithm is used to visually guide the UAV so that it keeps tracking the detected target. The detected target's bounding box and the image frame center are the only information used to control the forward motion, heading, and altitude of the vehicle. The control approach consists of two PID controllers that manage the heading and altitude rates. Using an NVIDIA Jetson TX2 embedded GPU, a novel system is introduced that operates entirely onboard the UAV, without external localization sensors or GPS. It uses a fisheye camera to perform visual SLAM for localization. The robustness of the vision algorithm was tested in simulation and real-time experiments. The supplementary videos can be accessed at the following link: http://bit.ly/2HwZaH4
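The idea of steering from the bounding box and the frame center alone can be sketched as follows. This is a minimal illustration, not the authors' controller: the `PID` class, the `tracking_rates` helper, the normalisation of the pixel error, and any gain values are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0
    first: bool = True

    def update(self, error: float, dt: float) -> float:
        """Return the control output for the current error sample."""
        self.integral += error * dt
        # No derivative term on the first sample, since there is no history yet.
        derivative = 0.0 if self.first else (error - self.prev_error) / dt
        self.prev_error = error
        self.first = False
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def tracking_rates(
    box: Tuple[float, float, float, float],  # (x_min, y_min, x_max, y_max) in pixels
    frame_size: Tuple[int, int],             # (width, height) in pixels
    yaw_pid: PID,
    alt_pid: PID,
    dt: float,
) -> Tuple[float, float]:
    """Map the offset between box centre and frame centre to rate commands."""
    x_min, y_min, x_max, y_max = box
    width, height = frame_size
    # Normalised offsets in [-1, 1]; image y grows downward, so flip the sign.
    err_x = ((x_min + x_max) / 2 - width / 2) / (width / 2)
    err_y = (height / 2 - (y_min + y_max) / 2) / (height / 2)
    yaw_rate = yaw_pid.update(err_x, dt)
    climb_rate = alt_pid.update(err_y, dt)
    return yaw_rate, climb_rate
```

When the target is centred in the frame both errors are zero and the commands vanish; a target to the right of and above the center produces positive heading and climb rates under this sign convention.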

Deep Reinforcement Learning for End-to-End Local Motion Planning of Autonomous Aerial Robots in Unknown Outdoor Environments: Real-Time Flight Experiments

Autonomous navigation and collision avoidance missions represent a significant challenge for robotic systems, as they generally operate in dynamic environments that require a high level of autonomy and flexible decision-making capabilities. This challenge is more pronounced for micro aerial vehicles (MAVs) due to their limited size and computational power. This paper presents a novel approach for enabling a micro aerial vehicle system equipped with a laser range finder to autonomously navigate among obstacles and reach a user-specified goal location in a GPS-denied environment, without the need for mapping or path planning. The proposed system uses an actor-critic-based reinforcement learning technique to train the aerial robot in a Gazebo simulator to perform a point-goal navigation task by directly mapping the MAV's noisy state and laser scan measurements to continuous motion control. The obtained policy can perform collision-free flight in the real world while being trained entirely in a 3D simulator. Intensive simulations and real-time experiments were conducted and compared with a nonlinear model predictive control technique to show the policy's generalization to new, unseen environments and its robustness against localization noise. The results demonstrate our system's effectiveness in flying safely and reaching the desired points by planning smooth forward linear velocity and heading rates.

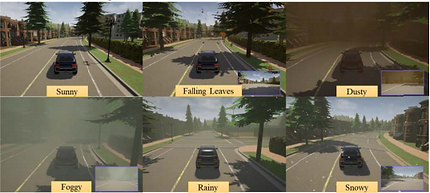

Driverless Car: Autonomous Driving Using Deep Reinforcement Learning In Urban Environment

Deep reinforcement learning has opened new possibilities for solving complex control and navigation tasks. This paper presents deep reinforcement learning-based autonomous navigation and obstacle avoidance for self-driving cars, applying a Deep Q-Network to a simulated car in an urban environment. The approach uses two types of sensor data as input: a camera and a laser sensor mounted at the front of the car. The paper also designs a cost-efficient, high-speed car prototype capable of running the same algorithm in real time. The design uses a camera and a Hokuyo LiDAR sensor at the front of the car and an embedded GPU (NVIDIA Jetson TX2) for running deep learning algorithms on the sensor inputs.

Neural Network-based Robust Adaptive Certainty Equivalent Controller for Quadrotor UAV with Unknown Disturbances

This paper proposes a robust adaptive neural network certainty equivalent controller for a quadrotor unmanned aerial vehicle. The controller is applied in the outer loop for position control, where it directly generates the desired roll and pitch angle commands, which are then passed to the inner loop for attitude control. The proposed controller accounts for the vehicle's kinematic and modeling error uncertainties, which are associated with external disturbances, inertia, mass, and nonlinear aerodynamic forces and moments. The control method combines an adaptive radial basis function neural network, which approximates the unknown nonlinear dynamics, with a certainty equivalent control technique, so that neither a precise dynamic model nor prior information about the disturbances is needed. The adaptation law was derived using Lyapunov theory to verify the stability and superiority of the new algorithm. The performance and effectiveness are also verified through several simulations. The analysis shows that the altitude, position, and attitude tracking errors converge to zero and that closed-loop stability is guaranteed under extreme conditions.

Live Multi-Target Detection Using Single Camera-Equipped Unmanned Aerial Vehicle

Short- or long-term observation from a bird's-eye view and environmental monitoring are crucial tasks today. Urban wilderness search and feedback response require a large number of sensors, a wide operating range, and substantial processing power to cover a large region. An unmanned aerial vehicle carrying a single camera positioned for a bird's-eye view is a promising platform that provides aerial imagery and can be an alternative solution for remote monitoring. Given the cost and weight limitations of a UAV's payload, restricting the payload to camera-based technology is the feasible choice. This paper presents a real-time multi-object detection and tracking algorithm for a UAV camera that runs on both onboard devices and distant ground stations, and it compares tracking performed onboard with tracking performed at the ground station. The system was tested in multiple outdoor trials at heights from 3 m to 30 m. It can detect objects at distances ranging from less than 1 m to 50 m. The comparison and results show that the system is highly dependable and robust.

Global Fast Terminal Sliding Mode Control for Quadrotor UAV

This paper presents the mathematical model of a quadrotor unmanned aerial vehicle (UAV) and proposes a Global Fast Terminal Sliding Mode Controller to achieve trajectory tracking. The control system is decoupled into two parts: an inner loop for attitude control and an outer loop for position control. The stability of the proposed controller is proven via Lyapunov stability theory. Simulations demonstrate the effectiveness of the designed global fast terminal sliding mode controller in comparison with regular sliding mode control.
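For orientation, the sliding surface most commonly associated with global fast terminal sliding mode control is shown below. This is the textbook form and is stated here as an assumption: the abstract does not give the paper's exact surface, and the symbols $e$ (tracking error), $\alpha$, $\beta$, $p$, and $q$ are introduced only for illustration.

$$
s = \dot{e} + \alpha e + \beta e^{q/p}, \qquad \alpha, \beta > 0, \quad p > q > 0 \ \text{odd integers}
$$

On the surface $s = 0$ the error obeys $\dot{e} = -\alpha e - \beta e^{q/p}$. The linear term dominates far from the origin and gives fast exponential-like decay, while the fractional-power term dominates near the origin and yields finite-time convergence. A typical Lyapunov candidate for the reaching phase is $V = \tfrac{1}{2} s^{2}$, with the control law chosen so that $\dot{V} < 0$ whenever $s \neq 0$.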

Intelligent Controller Design for Quad-Rotor Stabilization in Presence of Parameter Variations

The paper presents the mathematical model of a quadrotor unmanned aerial vehicle (UAV) and the design of a robust self-tuning PID controller based on fuzzy logic, which offers several advantages over certain conventional control methods, specifically in dealing with highly nonlinear systems and parameter uncertainty. The proposed controller is applied to the inner and outer loops for heading and position trajectory tracking control to handle the external disturbances caused by variation in the payload weight during flight. Numerical simulation results using the Gazebo physics engine simulator and real-time experiments on an AR.Drone 2.0 testbed demonstrate the effectiveness of this intelligent control strategy, which improves the robustness of the whole system and achieves accurate trajectory tracking control compared with a conventional proportional-integral-derivative (PID) controller.

Voice Enabled Smart Drone Control

In the era of smarter technology, human-machine interaction plays an important role. With advances in speech technology, it has entered everyday life through systems, robots, and now UAVs, making all of them smarter. However, a low-cost, real-time, and adaptive voice-based control system remains out of reach. This paper develops a voice-based control system built on open-source components, which reduces production cost and incurs zero maintenance cost. It uses HMM-based speech recognition with speaker adaptation and applies control logic to control a UAV.